Author: Chandler T Wilson

Things to consider when driving machine intelligence within a large organization

While modern-day A.I. or machine intelligence (MI) hype revolves around big “eureka” moments and broad-scale “disruption”, expecting these events to occur regularly is unrealistic. The reality is that working with AIs on simple routine tasks will drive better decision making systemically as people become comfortable with the questions and strategies that are now possible. Hopefully, it will also build the foundation for more of those eureka(!) moments. Regardless, technological instruments allow workers to process information at a faster rate while increasing the precision of understanding, enabling more complex and accurate strategies. In short, it’s now possible to do in hours what once took weeks. Below are a few things I’ve found helpful to think about when driving machine intelligence at a large organization, as well as what is possible.

- Algorithm aversion: humans are more willing to accept flawed human judgment. However, people are very judgmental if a machine makes a mistake, even within the lowest margin of error. Decisions generated by simple algorithms are often more accurate than those made by experts, even when the experts have access to more information than the formulas use. For further elaboration on making better predictions, the book Superforecasting is a must-read.

- Silos! The value of keeping your data or information secret as a competitive edge does not outweigh the value of the innovation and insights that become possible when data is liberated within the broader organization. Where possible, build what I call diplomatic back channels through which teams or analysts can share data with each other.

- Build a culture of capacity. Managers are willing to spend 42 percent more on an outside competitor’s ideas. As Leigh Thompson, a professor of management and organizations at the Kellogg School, says, “We bring in outside people to tell us something that we already know,” because it paradoxically means all the wannabe “winners” on the team can avoid losing face. It’s not a bad thing to seek external help, but if this is how most of your novel work gets done and where you go to get your ideas, you have systemic problems. As a result, the organization will fail to build strategic and technological muscle, which is likely to create a culture that emphasizes generalists, not novel technical thinkers, in leadership roles. In turn, you end up with an environment where technology is bent to fit legacy processes and thinking, when it should be the other way around if you want to stay relevant. Avoid the temptation to outsource everything because nothing seems to be going anywhere right away. That 100-page PowerPoint deck from your consultant is only going to help in the most superficial of ways if you don’t have the infrastructure to drive the suggested outputs.

OSINT One. Experts Zero.

Our traditional institutions, leaders, and experts have shown themselves incapable of understanding and accounting for the multidimensionality and connectivity of systems and events. Consider the rise of far-right parties in Europe, the disillusionment with European Parliament elections as evidenced by voter turnout in 2009 and 2014 (despite more money being spent than ever), Brexit, and now the election of Donald Trump as president of the United States of America. In short, there is little reason to trust experts without multiple data streams to contextualize and back up their hypotheses.

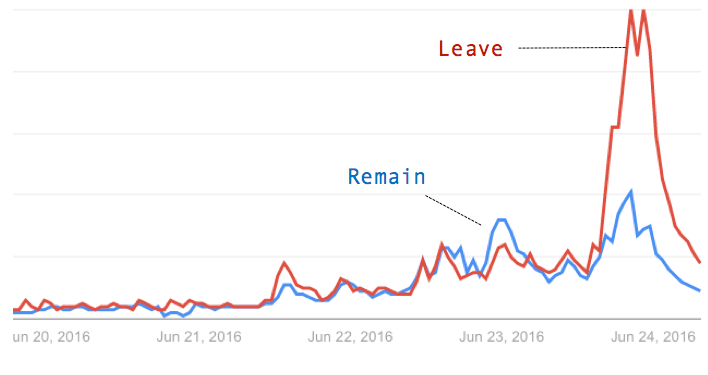

How could experts get it wrong? Frankly, it’s time to shift away from the conventional ways of making sense of events in the political, market, and business domains. The first variable is reimagining information from a cognitive linguistic standpoint, probably the most neglected area in business and politics, at least within the mainstream. The basic idea? Words have meaning. Meaning generates beliefs. Beliefs create outcomes, which in turn can be quantified. The explosion of mass media, followed by identity-driven media, social media, and alternative media, is a problem: we are at the mercy of media systems that frame our reality. If you doubt this, reference the charts below. Google Trends is deadly accurate in illustrating that whoever is on people’s minds the most, for bad or good, wins, at least when it comes to U.S. presidential elections. The saying that bad press is good press is quantified here, as is George Lakoff’s thinking on framing and repetition (Google search trends can be used to easily see which frame is winning, BTW).

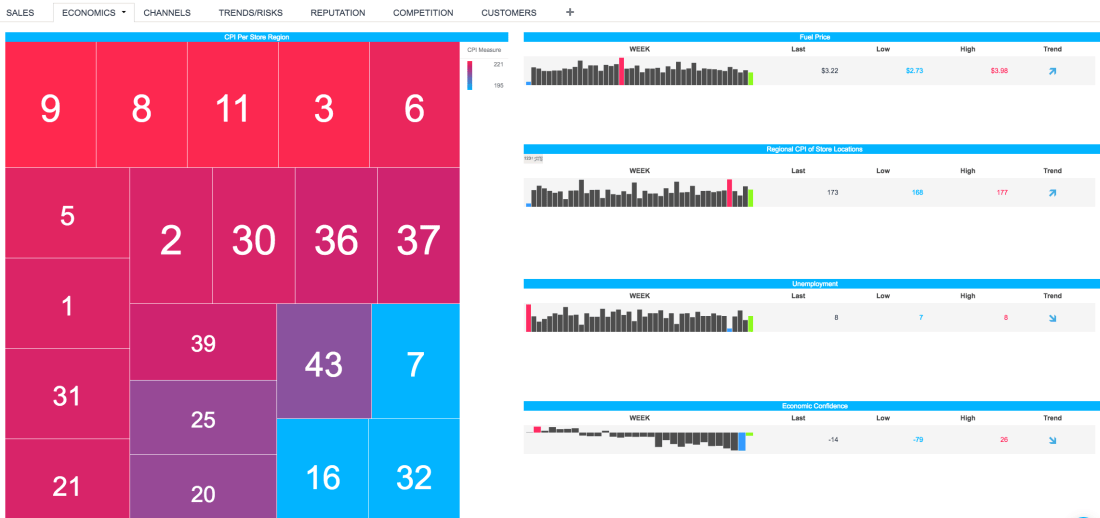

Within this system, almost nothing forces anyone to challenge or question their own beliefs. Institutions and old media systems used to be able to bottleneck this: they were the only ones with a soapbox, and information moved slowly enough. To outthink current information systems, there is a need for unorthodox data, such as open-source intelligence (OSINT), and for creativity, both of which traditional systems of measurement and strategy lack. To a fault, businesses, markets, and people strive for simple, linear, and binary solutions or answers. Unfortunately, complex systems, i.e., the world we live in, don’t dashboard into nice simple charts like the one below. The root causes of issues are ignored, left untested, and stripped of context, which creates only a superficial understanding of what affects business initiatives.

I know this may feel like a reach in terms of how all that is mentioned is connected, so more on OSINT, data, framing, information, outcomes, and markets to come.

Cheers, Chandler

The Brexit

While investors were in shock, open source signals such as Google Trends often pointed to “Leave” predominating, illustrating expert and market biases. Perhaps they should work on integrating these unconventional data streams better (sorry, couldn’t help it). The UK’s decision to exit from the EU is part of a larger global phenomenon that could have been understood better with open source data, not just market data.

The world is growing more complex. Information is moving faster. Humans have not evolved to retain or understand this mass output of (dis)information in any logical way. In response, people retreat to simple explanations, and new ideas that might challenge one’s frame are ignored; this becomes the norm. Populist decisions are made and embraced, oftentimes in reaction to the establishment or elite. Multinational corporations and elites will need to step outside of their bubble and take note as nationalist, and sometimes isolationist, figures and movements gain in both popularity and power: Donald Trump, Bernie Sanders, President Erdogan of Turkey, Marine Le Pen’s Front National in France, Boris Johnson (former Mayor of London and Brexit backer, with a good chance of taking David Cameron’s place), Germany’s AfD, and the 5 Star Movement in Italy.

In addition to more media coverage, more people were associated with the Leave campaign, which is an advantage. During a political campaign, choices and policy lines are anything but logical; they tend to fall along emotional lines, so institutional communications must have a noticeable figurehead, especially in the media age. It says something when the top people associated with Remain are Barack Obama, Janet Yellen, and Christine Lagarde. Note that David Cameron is more central to Leave. Nonetheless, the pleas by political outsiders and institutions such as the IMF and World Bank for the UK to remain in the EU potentially caused damage to the “Remain” campaign. UK voters did not want to hear from foreign political elites. This is illustrated by the connection and proximity of the “Obama Red Cluster” to the French right-wing Forest Green cluster (and the results) below. The “Brexit” could lend credence to the possibility of EU exit contagion. There are very real forces in France (led by the Front National) and Italy (led by the 5 Star Movement, which just won big in elections) that are driving hard for secession from the EU and/or, potentially, the Eurozone.

Ramifications:

- Banks (especially European ones) are fleeing to the gold market, seeking shelter from volatility. While this is to be expected, dividend-based stocks and oil would also be attractive to those seeking stability.

- Thursday’s referendum sent global markets into turmoil. The pound plunged by a record amount, and the euro slid by the most since it was introduced in 1999. Historically, the British pound reached an all-time high of 2.86 against the U.S. dollar in December of 1957 and a record low of 1.05 in February of 1985.

- Don’t count on US interest rate hikes. Yellen has expressed concern about global volatility on multiple occasions. The Brexit just added to that. The Bank of England could follow the US Fed and drop interest rates on the GBP to account for market uncertainty.

- If they are aggressive, US companies could use European uncertainty to gain on European competitors. Due to the somber mood within Europe, European companies could be more conservative with investment, leaving them vulnerable.

- Alternatively, Brexit may trigger more aggressive U.S. or global expansion by European companies while the ramifications of Brexit become better understood.

- The political takeaway is that the Remain campaign was relatively sterile, with no figurehead or clear policy issues directly related to the EU. This was reflected by the diversity in associated search terms related to the “Leave” campaign. In addition, Angela Merkel, not an EU leader such as European Commission President Jean-Claude Juncker, was once again seen as the de facto voice of Europe.

The relationship between humans, machines and markets

Technology has increased access to information, making the world more similar on a macro and sub-macro level. However, despite the increased similarity, research shows business models are rarely horizontal, emphasizing the importance of micro-level strategic consideration. Companies routinely enter new markets relying on knowledge of how their industry works and the competencies that led to success in their home markets while not being cognizant of granular details that can make the difference between success and failure in a new market. Only through machine-driven intelligence can companies address the level of detail needed in a scalable and fast manner to remain competitive.

Furthermore, machine intelligence and the abundance of information have rapidly diminished the value of expertise and eroded the value of information itself. The expertise needed to outrun or beat machine intelligence has increased exponentially every year. Over the next one to two years, the most successful companies will accept that the burden of proof has switched from technologies and AI to human expertise. Machines will also come to reframe what business and strategy mean. Business expertise in the future will be the ability to synthesize and explore data sets and create options using augmented intelligence, not being an expert on a subject per se. The game changers will have the fastest “information to action” at scale.

A residual of that characteristic makes a “good” or “ok” decision’s value exponentially highest at the beginning – and oftentimes much more valuable than a perfect decision. To address this trend, organizations must focus on developing processes and internal communication that foster faster “information-to-action” opportunity cost transaction times, similar to how traders look at financial markets. Those margins of competitive edge will continue to shrink but will become exponentially more valuable.

Why businesses harnessing AI and other technologies are leading the way

Studies show experts consistently fail at forecasting and traditionally perform worse than random guessing in businesses as diverse as medicine, real estate valuation, and political elections. This is because people traditionally weigh experiences and information in very biased ways. In the knowledge economy, this is detrimental to strategy and business decisions.

Working with machines enables businesses to learn and quantify connections and influence in ways humans cannot. An issue is rarely isolated to the confines of a specific domain, and part of Walmart’s analytics strategy is to focus on key variables in the context of other variables that are connected. This can be done in extremely high resolution by taking a machine-based approach to mining disparate data sets, ultimately allowing flexibility and higher-resolution KPIs to make business decisions.

The effects of digital disintermediation and the sharing economy on productivity growth

Machines have increased humans’ ability to synthesize multiple information streams simultaneously, as well as our ability to communicate these insights, which should lead to a higher utility on assets. In the future, businesses will likely have to be more focused on opportunity cost and re-imagine asset allocation with increased competition due to lower barriers to entry. Inherently, intelligence and insights are about decisions. A residual of that characteristic makes a good or ok decision’s value exponentially highest in the beginning – and oftentimes more valuable than a perfect decision. To address this trend, organizations must focus on developing processes and internal communication that foster faster “information-to-action” transaction times, much like how traders look at financial markets.

Is this the beginning of the end?

Frameworks driven by machines will allow humans to focus on more meaningful and creative strategies that cut through the noise to find what variables can be controlled, mitigating superficial processes and problems. As a result, it is the end for people and companies that rely on information and routine for work, and the beginning for those who can solve abstract problems with creative and unorthodox thinking within tight margins. Those who do so will also be able to scale those skills globally with advancements in communication technology and the sharing economy, which will considerably speed up liquidity on hard and knowledge-based assets.

Next Generation Metrics

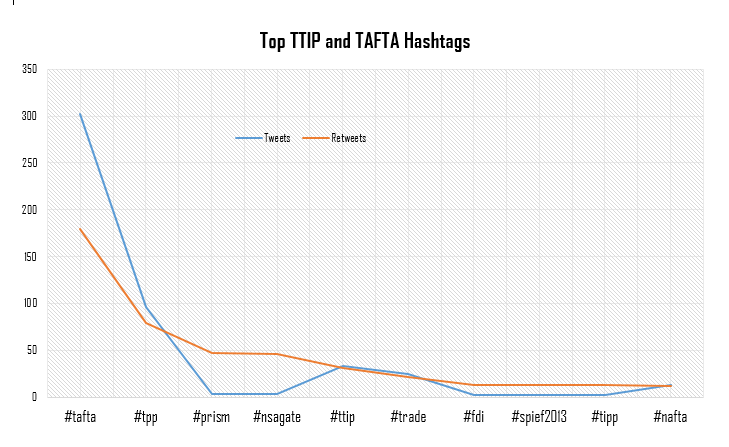

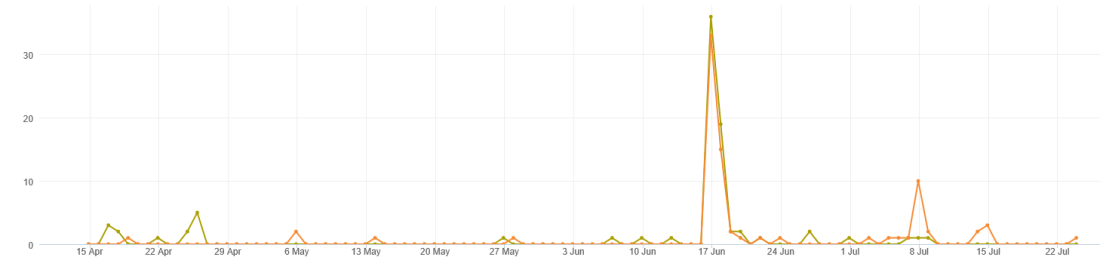

Recently I’ve been thinking of ways to detect bias as well as looking into what makes people share. Yes, understanding dynamics and trends over time, like the chart below (Topsy is a great, simple, free tool for getting basic Twitter trends), can be helpful, especially with linear forecasting. Nonetheless, such charts reach their limits when we want to look for deeper meaning, say at the cognitive or “information flow” level.

Enter networks. They enable an understanding of how things connect and exist at a level that is not possible with standard KPIs like volume, publish count and sentiment over time. By mapping out the network based on entities, extracted locations, and similar text and language characteristics, it’s possible to map coordinates of how a headline, entity or article exists and connects to other entities within the specific domain. In turn, this creates an analog of the physical world with stunning accuracy, since more information is reported online every day. For example, using online news articles and Bit.ly link data, I found that articles with less centrality to their domain (based on the linguistic similarity of the aggregated on-topic news articles), which denotes variables being left out of the article, typically got shared the most on social channels. In short, articles that were narrower in focus, and therefore less representative of the broader domain, tended to be shared the most. This is just the tip of the iceberg.
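To make the centrality idea concrete, here is a minimal sketch, not the actual pipeline behind the finding above. It assumes a plain bag-of-words representation and cosine similarity, and treats an article's centrality as its average similarity to every other article in the domain. The function names and the three headlines are made up for illustration.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Lowercased word counts; a deliberately crude stand-in for real text features."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def centrality(articles):
    """Average similarity of each article to all other articles in the domain."""
    vectors = {name: bag_of_words(text) for name, text in articles.items()}
    scores = {}
    for name, vec in vectors.items():
        sims = [cosine(vec, other) for key, other in vectors.items() if key != name]
        scores[name] = sum(sims) / len(sims) if sims else 0.0
    return scores


# Hypothetical headlines, invented purely for this example.
articles = {
    "broad": "greece eurozone debt talks markets banks",
    "markets": "eurozone debt markets banks react",
    "narrow": "greece minister motorbike photo",
}

scores = centrality(articles)
least_central = min(scores, key=scores.get)
print(least_central, {name: round(score, 3) for name, score in scores.items()})
```

Joining the lowest-centrality articles against share counts (for example from link-shortener data) would be the next step; that join is left out here because it depends on data this post does not include.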

The European Parliament this week

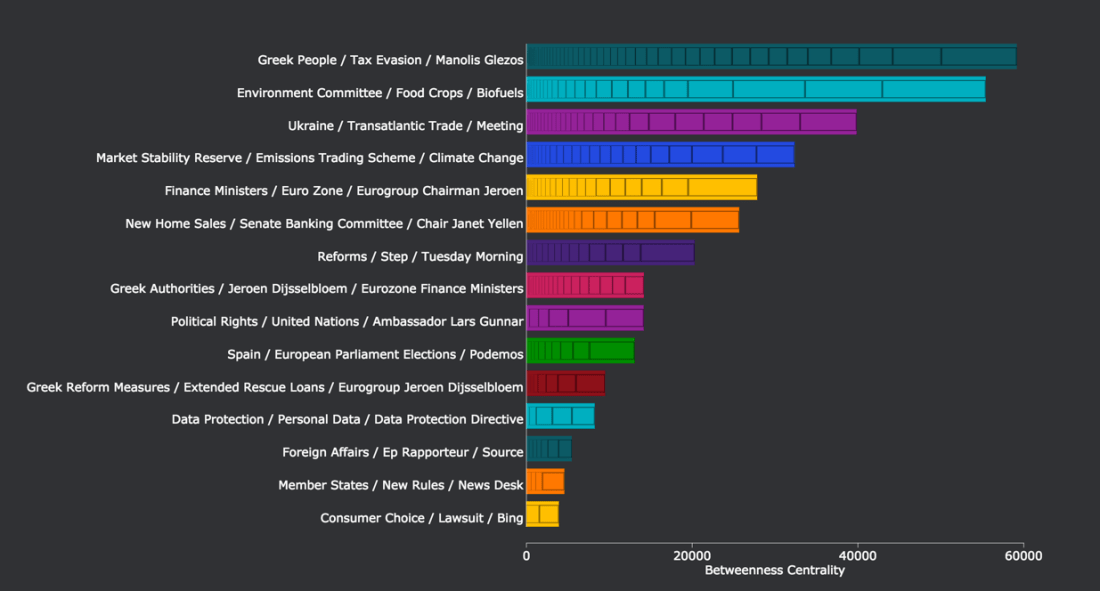

With the Greece/Eurozone situation reaching the brink, I decided to take a look at what’s been happening over the past week within the European Parliament domain.

Some takeaways after I extracted the top people and issues:

- The Eurozone, Yellen’s decision to be patient with the Fed and housing markets are interlinked (not a surprise), indicating US businesses should be mindful of the Greek debt restructuring. This has affected foreign exchange markets and the domestic retail sector a bit.

- Outside of global finance and the Greek debt restructuring, the European Parliament’s decision to back new limits for food-based biofuels was the most embedded policy instance. Thomas Nagy, EVP at Novozymes and the most central person to the policy, had this to say: “A stable and effective framework is the only way forward to secure commercial deployment”.

- Climate change and carbon trading (to be reformed in 2018) were most central to the new policy, as were the EU plans to merge energy markets, which ALDE feels “will be a nightmare for Putin” and will weaken Russia’s grip on Europe’s energy needs.

The bar chart below shows which topics within the European Parliament domain are associated with each person. The people are represented by the colors from the network graph above. It’s in hierarchical form based on centrality (to the European Parliament).

Something to be cognizant of: Data Protection

While data protection issues sit on the edge of EU affairs, as indicated at the top left and bottom right of the network graph below (highlighted in teal green and dark red), they are becoming embedded within the broader scope of the EU Parliament. Note that they range across the network’s centrality. This indicates that policies will have to be negotiated within a multitude of domains.

Stewart Room, a partner at PwC Legal, warns “businesses that are waiting for the EU General Data Protection Regulation (GDPR) before taking action have already missed the boat.” I agree. Companies will need to be cognizant of the European Union’s penchant to regulate data and technology, often before understanding it.

Multi-sector coalitions have to be built. Framing legislation within global policy that is both considerate of business efficiency and empathetic to consumer concerns about privacy could help avoid the backlash that ACTA and SOPA faced. Data means too much to business. If not addressed in a thoughtful way, it could end up being the Trojan horse to TTIP and TPP and push those negotiations, and therefore economies, backwards.

Isn’t it cool how we can mine and extrapolate information from open source data for strategic intelligence? Much more contextual than Googling everything. Also it illustrates just how interlinked the world has become.

-CT

European Union + OSINT

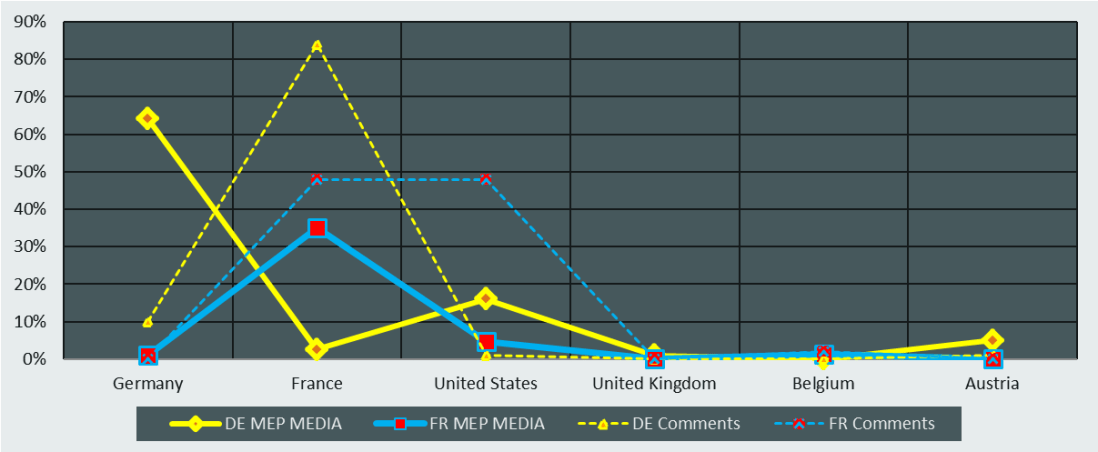

Germany is, without question, the most powerful and important member state in the EU. Everything rides towards, through, and with Berlin. With that in mind, and since Germans have been typically disengaged in EU Affairs, I decided to take a quick look at European Parliament election hashtags (#EP2014, #EU14, and #EU2014) to see which member states are engaging and which ones are not.

A few years back, I worked for the EPP Group, Europe’s largest political party. The future power of Germany was obvious at the time since it was right in the middle of the shit-storm of the Euro-crisis. I started looking at how the EPP’s efforts to communicate with the Germans were going. I found out from an analysis of online media that Germans weren’t at all engaged with the EU or their MEPs. The French actually commented on German EPP MEP media more than the Germans did. When it came to French EPP MEPs, Germany didn’t return the love. Who did? Believe it or not, people in the US, keeping a close eye on the global markets, accounted for about 49% of comments on French EPP MEP content. Who says US citizens are close-minded? At the time, I felt that comments were a decent KPI for interest and engagement, especially since commenting took a bit more effort and online and social media weren’t as widely adopted. Below is the chart I showed my colleagues at the EPP about 3 years ago. This was before the “big data” hype machine.

Seeing the data, the EPP Group should have focused on closing this deficit aggressively and in a coordinated way. You just can’t have a strong EU without an engaged Germany.

Fast-forward 3 years. The EU Parliament elections are coming up. The EU institutions are being questioned, and referendums to withdraw have become focal points in the UK. And there’s lots of money backing this idea. Is this completely because of a disengaged Germany? Not at all. Some of it is just far-right jargon inherent to a bad economy that leaders have very little control over. However, it still makes a big difference when the most powerful member states are apathetic and disengaged.

In the present day, 2014, not much has changed. Although hashtags are probably not the most precise KPIs and leave a bit to be desired (I needed something quick), the data shows that in proportion to German MEP market share, Germany is still the most disengaged country when it comes to mentions of EU election-specific hashtags. What was telling was that Greece had just 62 fewer mentions than Germany, despite Germany being about seven times the size. In fact, only five member states were a net positive (including Greece). Belgium ranked first, obviously, because Brussels is home to most of the EU institutions. The “Brussels Bubble” is alive and well, even though this time it was supposed to be different.
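One way to read “net positive” is as a country’s share of hashtag mentions minus its share of MEP seats. The sketch below works under that assumption; it is not the original calculation. The seat counts are the 2014 allocations for three member states, but the mention counts are placeholders invented for illustration (only the 62-mention gap between Germany and Greece comes from the text), and shares are computed within these three countries only.

```python
def net_engagement(mentions, seats):
    """Share of mentions minus share of MEP seats, per country.

    Positive values mean a country talks about the election more than
    its seat share alone would suggest.
    """
    total_mentions = sum(mentions.values())
    total_seats = sum(seats.values())
    return {
        country: mentions[country] / total_mentions - seats[country] / total_seats
        for country in mentions
    }


# MEP seats allocated for the 2014 election.
seats = {"Germany": 96, "Greece": 21, "Belgium": 21}

# PLACEHOLDER mention counts, not the real data behind this post.
mentions = {"Germany": 500, "Greece": 438, "Belgium": 900}

net = net_engagement(mentions, seats)
net_positive = sorted(country for country, value in net.items() if value > 0)

for country, value in sorted(net.items(), key=lambda item: item[1], reverse=True):
    print(f"{country}: {value:+.3f}")
```

With all 28 member states and real counts plugged in, the same function would give the full ranking.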

In politics, getting people mad often drives engagement. This sometimes drives votes to parties that many deem unreasonable (Vlaams Belang, Front National, etc.). For example, Mr. Farage’s Eurosceptic party, UKIP, has utterly dominated communications compared to the other European Parliament political groups. This isn’t a good thing if you note that the winners usually have a higher volume of mentions. Surely, Europe is stronger and more competitive together than it is fragmented. Nonetheless, if mainstream parties can’t even figure out how to communicate and run a proper campaign, why should they be trusted with leadership?

These deficits could have and should have been addressed years ago. You could see them coming from miles away, yet those in power ignored the data or just made half-hearted and superficial efforts to save face. It’s not always easy to lead, and no one expects it to be, but at some point, you have to make bold moves, seek out candor, and take a look in the mirror.

The German election and EU political communications

For this post, I decided to remain old school and mainly rely on search data. It’s pretty basic, but typically offers a great view of what people are interested in. Google’s market share is around 90% in Europe and it’s the most visited site in the world. In my opinion, Google Trends is the largest focus group in the world.

First I looked at the overall interest in Germany in Angela Merkel and Peer Steinbrück, as well as in their parties, the CDU and SPD. Initially, I was curious as to how interest in party identity compared to interest in the politician. To anchor this chart I did the same with the US presidential campaign (the chart below). I have a hunch, and the data seems to be telling me thus far, that the more media-oriented politics becomes (along with everything else in the world), the more important celebrity, authenticity, and individuality become. Take a look at this recent brand analysis done by Forbes. Chris Christie wins, having the highest approval rating of over 3,500 “brands” according to BAV (awesome company) at 78%. For those that don’t know, Christie is probably the most straightforward, tell-it-like-it-is politician in the country.

So what can we learn from the Google search interest shown below?

- Politics is still about sheer volume and name recognition. For those that think being novel and unique achieves victory over blasting away nonstop in a strategically framed and coordinated way, think again. People tune out if they aren’t interested. Irrelevance is almost always worse than bad PR or sentiment (excluding a case like Anthony Weiner). You simply don’t win if you don’t interest people. If people aren’t talking about you, you’re not interesting. Merkel had more search interest than Steinbrück and over the course of the year probably got 10,000 times more airtime, both good and bad, due to her large role in the euro crisis. In short, repetition is king.

- Framing and a consistent language strategy are vital. Volume can be shown to equate with recognition of a person, but the same logic applies easily enough to a policy or issue. Give me a choice between a clever social media strategy and a consistent language strategy, meaning all the key issues are repeated by the party and coordinated as much as possible, and I’ll take the language strategy any day. It’s amazing how often simply being consistent in political communications is overlooked by companies and political leaders in Europe. Social media tends to be a framing conduit, not the reason people mobilize or have opinions.

- The world is growing ever more connected. Look at how global the reporting of the German election was. Obviously, its importance was higher due to Germany’s rising influence, but nonetheless the number of sources from all over the world is impressive. A note for the upcoming EU elections: don’t forget to target the USA and other regions to influence specific regions in Europe. A German constituent might read about a policy in the Financial Times, a Frenchman in the Wall Street Journal, and an American based in Brussels, who knows Europeans who can vote, on Bloomberg.

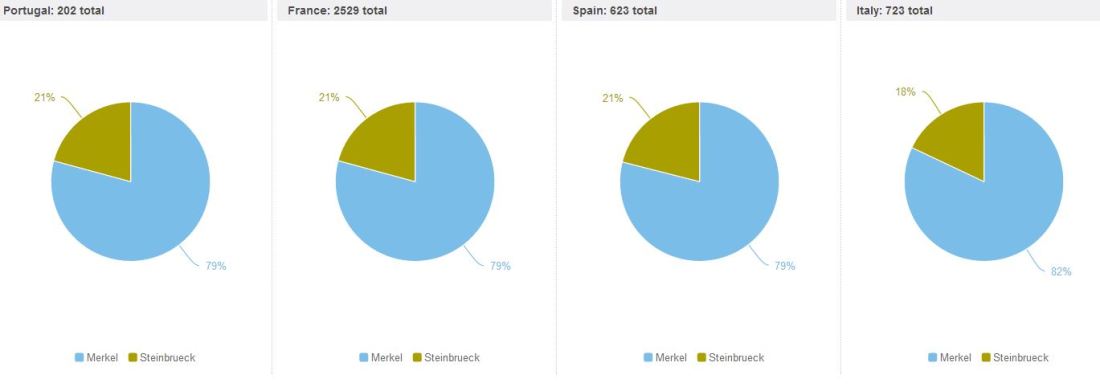

I decided to throw in Twitter market share of the candidates from August 21st to September 21st, the day prior to the election. I found it interesting to see how closely Belgium and the United States reflect Germany, probably because these countries are looking at the election from more of a spectator view. Meanwhile, southern Europe, which had a vested interest in the election, was pretty much aligned. France, Spain, and Italy seem to report a bit more, and in a similar way, on Merkel, probably due to sharing the same media sources. Unfortunately, I don’t have the time to look into this pattern too much at the moment, but it’s something I’ll continue to think about in the future.


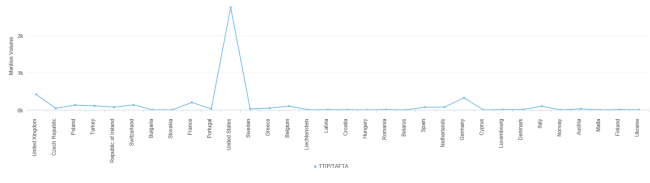

Breakdown of TTIP and TAFTA

TTIP/TAFTA is a true game changer for both the EU and US in terms of economic value, especially in a time of crisis for the EU. To find out exactly what content people consumed and to analyze policy trends, we mined the web (big data). At the moment TTIP/TAFTA is not without issues, as we all know in Brussels: #NSAGate, data privacy and IP are slowing down negotiations (we’re looking at you, France), and this is generally what the data had to say as well.

Overview: 5,505 mentions of TTIP/TAFTA in the last 100 days, too large a number for businesses and institutions to ignore. In short, if you have something to say about it, you need to join the conversation ASAP (indecision is a decision).

The biggest uptick, a total of 300 mentions, came on June 17th, when Obama spoke at the G-8 summit in Northern Ireland and trade talks began. The key theme at this time was the potential boost to the economy. The official press release is here.

“The London-based Centre for Economic Policy Research estimates a pact – to be known as the Transatlantic Trade and Investment Partnership – could boost the EU economy by 119 billion euros (101.2 billion pounds) a year, and the U.S. economy by 95 billion euros. However, a report commissioned by Germany’s non-profit Bertelsmann Foundation and published on Monday, said the United States may benefit more than Europe. A deal could increase GDP per capita in the United States by 13 percent over the long term but by only 5 percent on average for the European Union, the study found.”

Given that there is conflicting information, we wanted to see whose idea and data wins out: the Centre for Economic Policy Research (CEPR) or the Bertelsmann Foundation (BF)? To do this we looked to see which study was referenced most. The chart below shows the mentions of each organization within the TTIP/TAFTA conversation over the last 100 days. The Centre for Economic Policy Research is in orange and the Bertelsmann Foundation is in green.

In total both studies were cited almost the same amount:

- Centre for Economic Policy Research: 80 Mentions

- Bertelsmann Foundation: 83 mentions

- Both organizations were mentioned together 53 Times.
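The three counts above are enough to work out how much the two citation pools overlap. The sketch below assumes the 53 joint mentions are already included in each organization’s own total (the post does not say either way), and the function name is just for illustration.

```python
def overlap_stats(count_a, count_b, both):
    """Split two overlapping mention counts into exclusive and shared parts."""
    union = count_a + count_b - both
    return {
        "only_a": count_a - both,
        "only_b": count_b - both,
        "union": union,
        "jaccard": both / union,  # shared mentions as a fraction of all distinct mentions
    }


cepr_mentions = 80   # Centre for Economic Policy Research
bf_mentions = 83     # Bertelsmann Foundation
joint_mentions = 53  # assumed to be counted inside both totals above

stats = overlap_stats(cepr_mentions, bf_mentions, joint_mentions)
print(stats)
```

Under that assumption, only 27 mentions cite CEPR alone and 30 cite the Bertelsmann Foundation alone, so nearly half of the 110 distinct mentions present the two studies side by side.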

More recently, though, the trend seems to show that the Centre for Economic Policy Research is being cited most in the last 30 days, including a large uptick on July 8th. This is mainly due to more of the sources being located in the US, and the US wanting to get a deal done faster than the more hesitant Europeans. Keep in mind the CEPR study claims larger benefits from TAFTA/TTIP than the BF study.

Where are the mentions?

- The US had 2,743 mentions (49% overall)

- All of Europe combined total was 1,986 (36% overall)
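As a quick arithmetic check on these shares, the snippet below assumes the 5,505 total mentions quoted in the overview is the denominator; the variable names are illustrative only.

```python
total_mentions = 5505   # total TTIP/TAFTA mentions over the last 100 days
us_mentions = 2743
europe_mentions = 1986

# Whatever is left over comes from outside the US and Europe.
rest_mentions = total_mentions - us_mentions - europe_mentions


def share(part, whole):
    """Percentage share, rounded to one decimal place."""
    return round(100 * part / whole, 1)


shares = {
    "US": share(us_mentions, total_mentions),
    "Europe": share(europe_mentions, total_mentions),
    "Rest of world": share(rest_mentions, total_mentions),
}
print(shares)
```

On that denominator the US share comes out at 49.8% and Europe at 36.1%, which matches the figures above if they were rounded down, and leaves about 14% of mentions from the rest of the world.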

Of the topics ACTA is still being talked about with, IP and Data Protection top the list. This is not surprising given France’s reluctance to be agreeable because of the former and #prism, so below are those themes plotted.

The top stories on Twitter are in the table below. It’s not surprising that the White House is number one, but where are the EU institutions and media on this?

| Top Stories | Tweets | Retweets | All Tweets | Impressions |
| --- | --- | --- | --- | --- |
| White House | 37 | 15 | 52 | 197738 |
| Huff Post | 26 | 0 | 26 | 54419 |
| Forbes | 23 | 0 | 23 | 1950786 |
| JD Supra | 15 | 2 | 17 | 42304 |
| Wilson Center | 12 | 7 | 19 | 72424 |
| | 12 | 0 | 12 | 910 |
| Italia Futura | 8 | 0 | 8 | 16640 |
| BFNA | 7 | 0 | 7 | 40246 |
| Citizen.org | 7 | 13 | 20 | 69356 |
| Slate | 7 | 0 | 7 | 13927 |
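Raw tweet counts and reach tell different stories, so it can help to rank the same table by impressions per tweet. This is a rough sketch using the figures from the table; the row with no source name is left out, and the variable names are illustrative.

```python
# (source, tweets, retweets, all_tweets, impressions), copied from the table above.
rows = [
    ("White House", 37, 15, 52, 197738),
    ("Huff Post", 26, 0, 26, 54419),
    ("Forbes", 23, 0, 23, 1950786),
    ("JD Supra", 15, 2, 17, 42304),
    ("Wilson Center", 12, 7, 19, 72424),
    ("Italia Futura", 8, 0, 8, 16640),
    ("BFNA", 7, 0, 7, 40246),
    ("Citizen.org", 7, 13, 20, 69356),
    ("Slate", 7, 0, 7, 13927),
]

# Sanity check: tweets plus retweets should equal the "All Tweets" column.
consistent = all(tweets + retweets == total for _, tweets, retweets, total, _ in rows)

# Impressions generated per tweet (originals and retweets combined).
per_tweet = {name: impressions / total for name, _, _, total, impressions in rows}
ranked = sorted(per_tweet.items(), key=lambda item: item[1], reverse=True)

for name, value in ranked:
    print(f"{name}: {value:,.0f} impressions per tweet")
```

By this measure Forbes leads by a wide margin despite having fewer than half the White House’s tweets, which suggests reach here depends far more on who is tweeting than on how often a story is tweeted.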

Everybody knows the battle for hearts and minds of people starts with a good acronym so I broke down the market share between TTIP (165) and TAFTA (2197):

I may add more in the coming days but those are a few simple bits of info for now. Nonetheless if you want to join the conversation on Twitter the top hashtags are below.